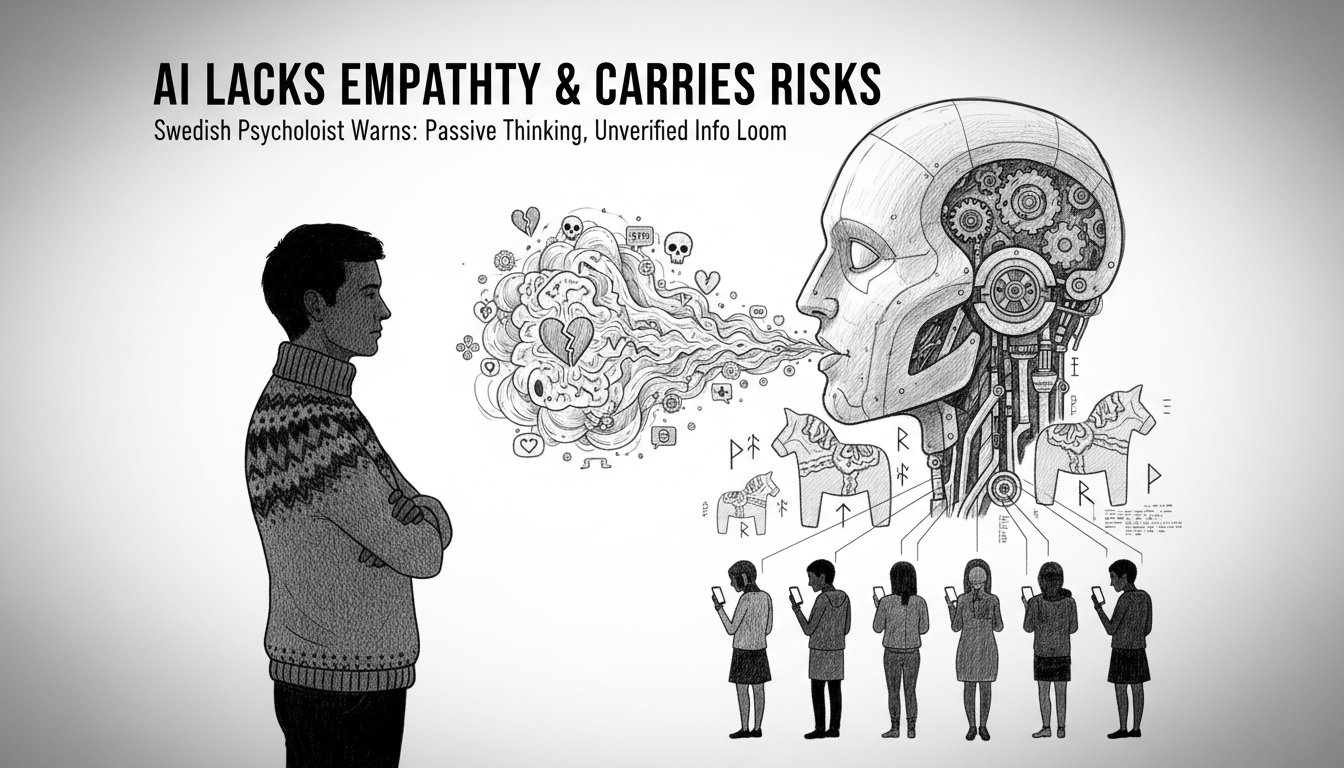

Psychologist Warns AI Lacks Empathy and Carries Risks

A Swedish psychologist warns that AI chatbots lack empathy and can reinforce negative mental patterns. One in five Swedish AI users now asks chatbots for health advice, raising concerns about passive thinking and unverified medical information. The convenience of constant AI access comes with psychological risks that other nations should watch closely.

More Swedes now view AI as a friend, turning to chatbots that always respond and never leave. Yet replacing human contact with artificial intelligence carries serious risks and might even prove dangerous.

Psychologist David Waskuri said AI lacks empathy and can reinforce negative patterns. One in five Swedes now uses AI instead of traditional search engines, and one in five AI users asks chatbots such as ChatGPT health questions.

These figures come from a new report by the Swedish Internet Foundation. The behaviors are most common among people under 35, who ask AI for diagnoses and for help interpreting medical records. They also seek advice about test results, X-rays, medications, and mental health struggles.

Waskuri, a licensed psychologist at Sveapsykologerna, explained the psychological dangers. Over-reliance on AI makes people passive in their thinking, and users risk accepting AI responses without questioning them.

The technology mirrors users' own thoughts back at them. This reflection can strengthen negative mental patterns. Several patients told Waskuri they turned to AI chatbots when feeling unwell.

Sweden ranks among Europe's leaders in technology adoption, and its citizens often embrace digital solutions early. This creates opportunities but also novel psychological challenges: the convenience of constant AI availability comes with clear trade-offs for mental wellbeing.

Can machines ever provide genuine emotional support? The answer appears to be no. Real human connection involves shared understanding that algorithms cannot replicate. Sweden's experience offers early warnings for other nations following similar technological paths.